SAM - Santex Virtual Assistant

Brief

SAM (Santex AI Member) is the LLM-powered virtual assistant of Santex, a tech company specializing in digital transformation. SAM answers questions about Santex and its solutions and services, assists in lead generation through an intelligent conversational experience, and discusses how the latest technologies, industry insights, and current tech news relate to the interests of the client.

Process

Discover

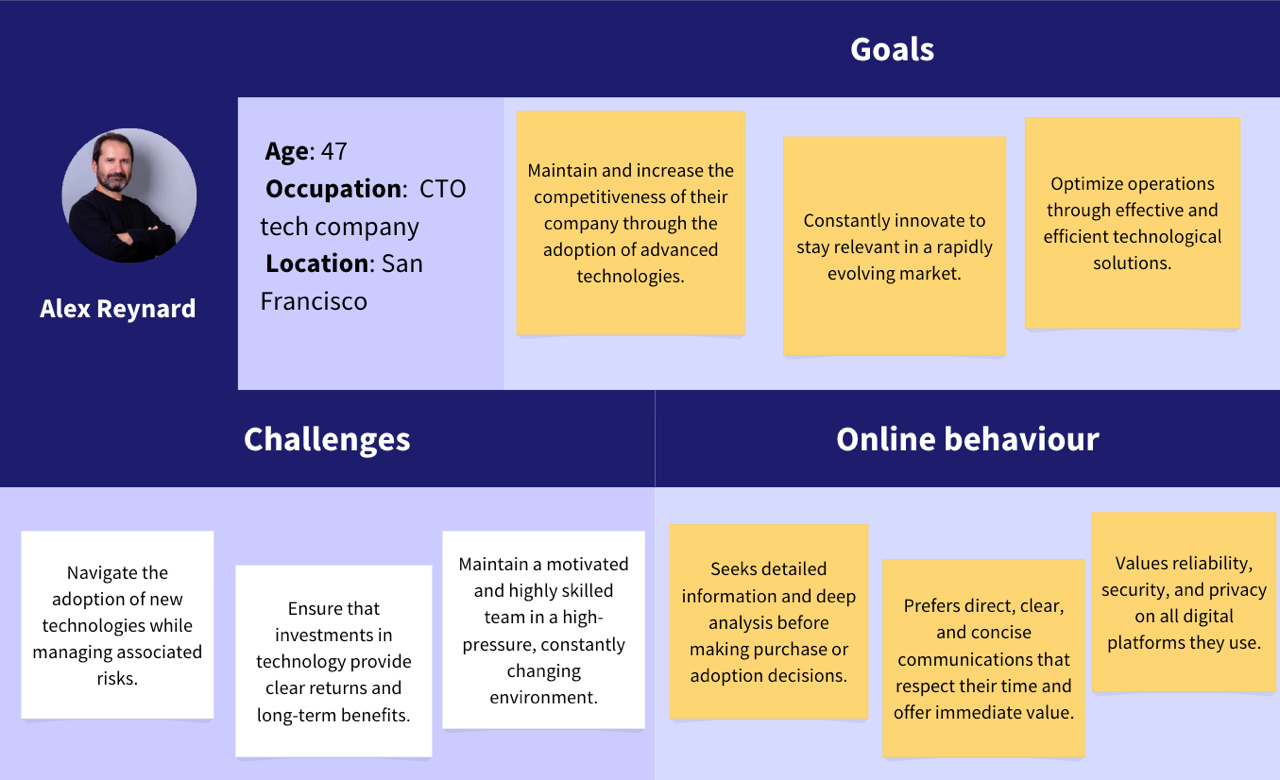

User Persona

As we delve into the conversation design process, it is crucial to understand the diverse audience we are catering to. By understanding and designing conversations with these personas in mind, we can ensure our solutions effectively meet the diverse needs and preferences of our target audience.

How to address these profiles

- Craft concise messages prioritizing clarity and avoiding jargon to respect their time.

- Highlight specific benefits of the virtual assistant: addressing challenges, boosting efficiency, and fostering innovation.

- Back up claims with case studies and data to build confidence in the effectiveness of the virtual assistant.

- Streamline interactions: lead with the core value proposition and offer detailed information as an option.

- Reinforce the security and reliability of the virtual assistant in every interaction.

- Emphasize the adaptability of the virtual assistant to meet the evolving needs of startups and the tech landscape.

- Offer networking opportunities and industry insights to facilitate learning and connections.

- Maintain transparent communication, providing easy access to comprehensive information about the capabilities of the virtual assistant.

Topics Mapping

Define the Minimum Viable Knowledge (MVK) of the virtual assistant, that is, map which requests it will understand and which answers it will be able to provide, as well as which ones it will not.
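As a rough illustration, a topic map of this kind can be kept as a simple lookup. The topic names below are hypothetical placeholders, not the actual list used for SAM.

```python
# Hypothetical topic map: True means the assistant is expected to answer,
# False means the topic is explicitly out of scope.
MVK_TOPICS = {
    "solutions_and_services": True,
    "lead_generation": True,
    "tech_news_and_insights": True,
    "medical_advice": False,  # illustrative out-of-scope topic
}


def is_in_scope(topic: str) -> bool:
    """Return True only for topics explicitly marked as supported."""
    return MVK_TOPICS.get(topic, False)
```

Treating unknown topics as out of scope is a design choice in this sketch: anything not mapped is refused rather than guessed.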

Define

Bot Persona

The bot persona was created to ensure a consistent and engaging user experience. By humanizing the bot, interactions become more relatable and enjoyable for users. The persona outlines the characteristics of the bot, tone, and style, guiding developers and content creators to maintain a unified voice across all interactions. This approach enhances user satisfaction, builds trust, and fosters familiarity. Additionally, a well-defined bot persona ensures alignment with brand values and effectively addresses user needs, resulting in a more intuitive and rewarding digital experience.

User Conversational Flows

The implications of a virtual assistant powered by an LLM

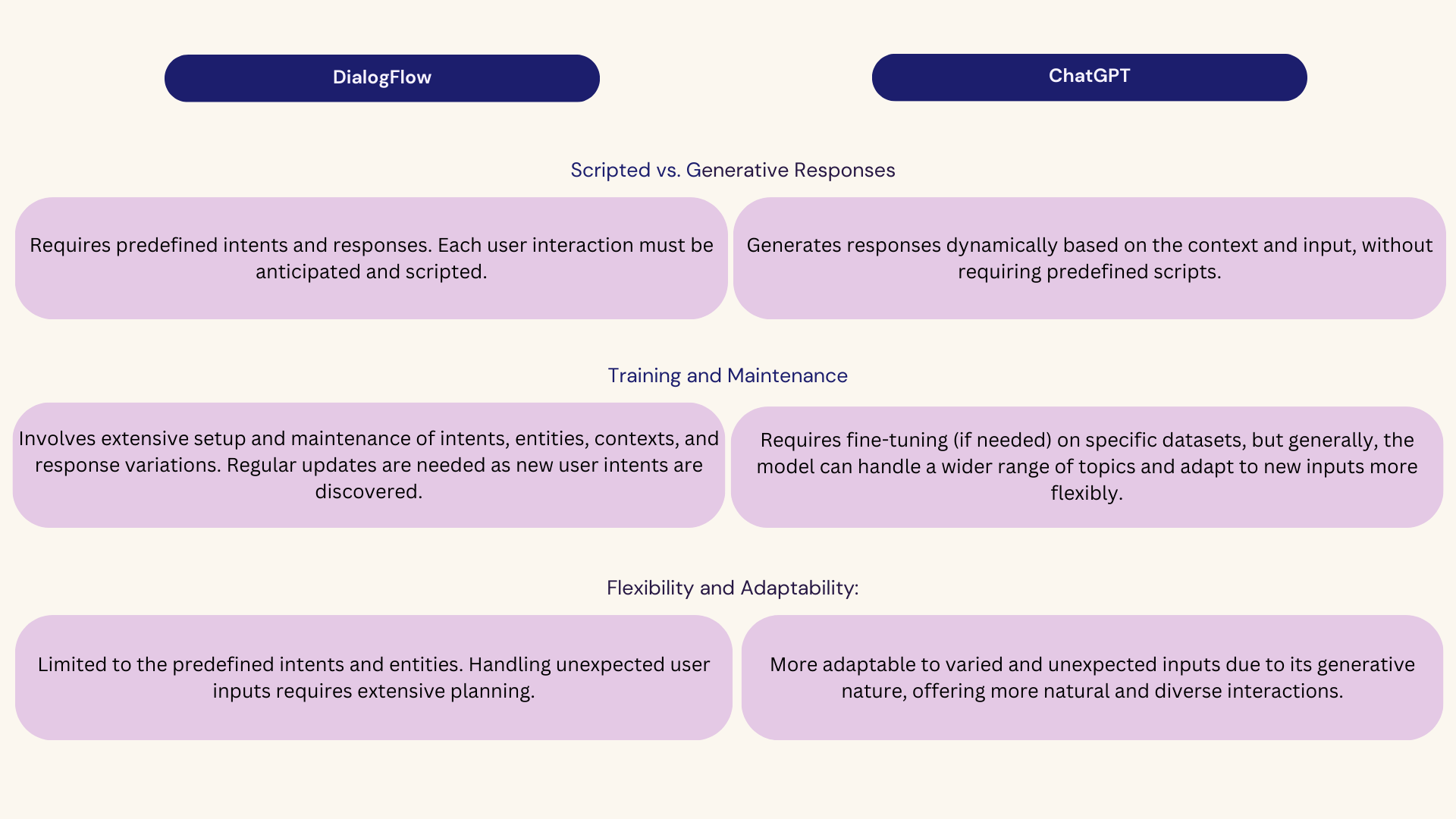

Creating user conversational flows for non-generative tools like DialogFlow compared to generative tools like ChatGPT involves several differences and similarities. Here is a detailed comparison:

More about this topic was addressed in the conference talk "Does the conversational design change when implementing a large language model (LLM) in a chatbot?" (video, in Spanish).

Scripted vs. Generative Responses

DialogFlow

Requires predefined intents and responses. Each user interaction must be anticipated and scripted.

ChatGPT

Generates responses dynamically based on the context and input, without requiring predefined scripts.
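The contrast can be sketched in a few lines. This is a simplified illustration, not the actual DialogFlow or ChatGPT API: the intent names, replies, and function names are assumptions made for the example.

```python
# Scripted approach: every intent and its reply is written in advance.
SCRIPTED_RESPONSES = {
    "greeting": "Hello! How can I help you today?",
    "services": "We offer digital transformation solutions.",
}
FALLBACK = "Sorry, I did not understand that."


def scripted_reply(intent: str) -> str:
    """Return the pre-approved reply for a matched intent, else a fallback."""
    return SCRIPTED_RESPONSES.get(intent, FALLBACK)


def build_generative_request(system_prompt: str, user_message: str) -> list[dict]:
    """Generative approach: no reply is stored. The model receives
    instructions plus the user's text and writes the answer itself."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]
```

In the scripted case the design effort goes into the response table; in the generative case it goes into the system prompt.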

Training and Maintenance

DialogFlow

Involves extensive setup and maintenance of intents, entities, contexts, and response variations. Regular updates are needed as new user intents are discovered.

ChatGPT

Requires fine-tuning (if needed) on specific datasets, but generally, the model can handle a wider range of topics and adapt to new inputs more flexibly.

Flexibility and Adaptability

DialogFlow

Limited to the predefined intents and entities. Handling unexpected user inputs requires extensive planning.

ChatGPT

More adaptable to varied and unexpected inputs due to its generative nature, offering more natural and diverse interactions.

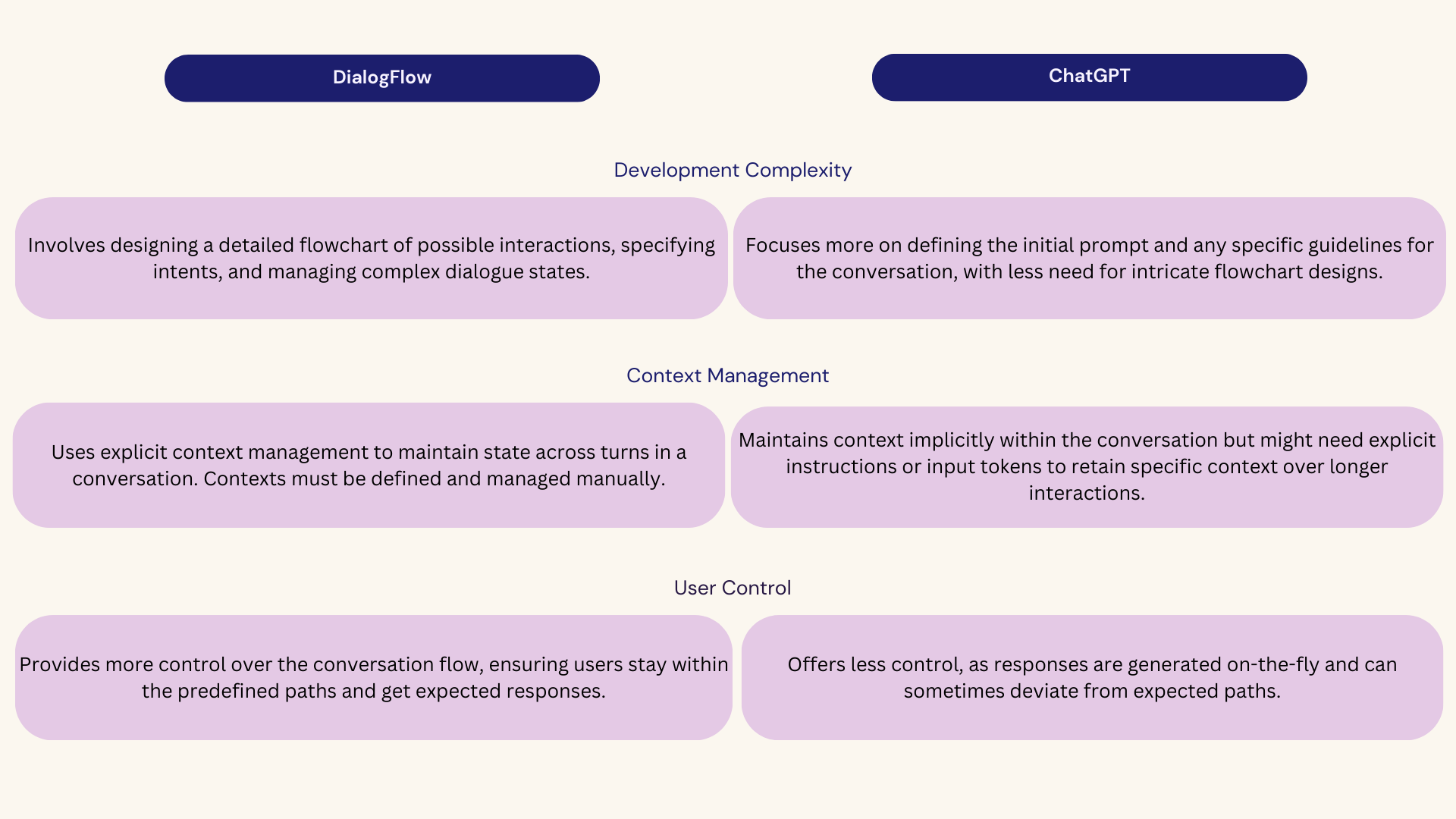

Development Complexity

DialogFlow

Involves designing a detailed flowchart of possible interactions, specifying intents, and managing complex dialogue states.

ChatGPT

Focuses more on defining the initial prompt and any specific guidelines for the conversation, with less need for intricate flowchart designs.

Context Management

DialogFlow

Uses explicit context management to maintain state across turns in a conversation. Contexts must be defined and managed manually.

ChatGPT

Maintains context implicitly within the conversation but might need explicit instructions or input tokens to retain specific context over longer interactions.
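One common way to retain context over longer interactions is to resend a trimmed message history on every turn. The sketch below assumes a chat-style message list with `role` and `content` keys; the function name and the default of six messages are illustrative choices.

```python
def trim_history(messages: list[dict], max_turns: int = 6) -> list[dict]:
    """Keep every system message plus the most recent non-system messages."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    # A slice of [-0:] would return the whole list, so handle zero explicitly.
    recent = rest[-max_turns:] if max_turns > 0 else []
    return system + recent
```

Keeping the system message outside the trimming window ensures the instructions are never dropped as the conversation grows.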

User Control

DialogFlow

Provides more control over the conversation flow, ensuring users stay within the predefined paths and get expected responses.

ChatGPT

Offers less control, as responses are generated on-the-fly and can sometimes deviate from expected paths.

User Flows

To create conversational flows, it is essential to consider the differences between a non-generative tool (DialogFlow) and a generative one (ChatGPT) and understand the specific characteristics and needs of each approach.

Non-Generative

As seen in the flow, the texts and different variables that could occur at specific moments of the user journey are specified in the diagram.

- Static Question-Answer Flow: The responses are predefined and do not change dynamically.

- UX Writing: It is crucial for conveying the personality of the bot and ensuring clarity in responses.

- Total Content Control: The content of the responses is controlled and pre-approved.

Generative

In contrast to non-generative flows, those powered by an LLM specify the content of the answer and how conversations should be led.

- Generative Question-Answer Flow: The responses are created dynamically by a language model.

- Prompt System: The prompts and data sources directly influence the quality of the responses.

- Adaptability: The responses can vary based on context and previous interaction.

Form vs. Substance

- Non-Generative (Form): Focus on the structure of the conversation, how information is presented, and the consistency of the voice of the bot.

- Generative (Substance): Focus on the substance and content of the responses, ensuring they are informative and relevant, supported by a robust prompt system and reliable data sources.

Develop

Getting ready

Creating a robust and effective System Prompt for a Large Language Model (in this case, ChatGPT) involves a comprehensive and methodical approach. As it was our first time working with this technology, our journey began with a deep dive into the fundamentals. We also had to develop our own methodologies and testing tools during the prototyping phase, because the technology was so new that existing resources were insufficient.

Initial training: It is essential to understand how an LLM works before starting to work with it. Taking courses on how language models work is a solid foundation to begin with.

Understanding key concepts: Understanding concepts such as tokenization (the process of dividing text into smaller units), hallucinations (outputs that are fabricated or not grounded in the source data), and RAG (Retrieval-Augmented Generation, in which relevant documents are retrieved and added to the prompt before the model generates a response) is crucial for effectively working with the model and addressing issues that may arise during the creation process.
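A toy example can make the retrieval idea concrete. The sketch below uses naive word overlap instead of the embedding search a production system would typically use, and the function names are assumptions for illustration only.

```python
import re


def tokenize(text: str) -> set[str]:
    """Naive word-level split. Real LLM tokenizers use subword units."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by the number of words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def augment(question: str, documents: list[str]) -> str:
    """Prepend the retrieved passages so the model can answer from them."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Grounding the prompt in retrieved text is one of the usual ways to reduce hallucinations, since the model is asked to answer from supplied material rather than from memory.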

Accuracy: This step is iterative. Building the parts of the System Prompt, such as the context, approach, and response format, involves trial and error, adjustments, and refinements to achieve optimal results. Additionally, understanding how the specific functions and tools attached to the LLM relate to one another is crucial to ensuring consistent and high-quality performance.

System Prompt

In developing SAM, we focused on key elements for effectiveness. Detailed system prompts, clear response formats, and data retrieval functions ensured accurate, engaging communication. User interaction guidelines and filtering maintained a safe, user-friendly environment. It is important to mention that achieving the final versions involved significant iteration on the system prompts, as no guides existed before. This approach resulted in a robust assistant that enhanced user engagement and support for the company.

Key Elements

System Prompts

Context Definition: Detailed context descriptions were created for SAM’s interactions on both the website and Slack.

Example:

You are SAM, a virtual assistant for Santex. Your role is to assist users on our website with their queries about digital transformation solutions and to help new employees on Slack with onboarding questions.

Approach: Specific guidelines were developed to handle different types of inquiries, such as sales, solutions, specific topics, and general inquiries.

Example: For inquiries about sales, highlight how the solutions of Santex can improve their business. For specific topics like AI or B Corp, discuss the expertise and how these impact the user’s business.
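A minimal sketch of how the context and approach could be assembled into a single prompt string follows. The structure, key names, and function name are assumptions for illustration; the wording is taken from the examples above.

```python
CONTEXT = (
    "You are SAM, a virtual assistant for Santex. Your role is to assist users "
    "on our website with their queries about digital transformation solutions "
    "and to help new employees on Slack with onboarding questions."
)

# Hypothetical keys: one guideline per inquiry type.
APPROACH = {
    "sales": "Highlight how the solutions of Santex can improve their business.",
    "specific topics": "Discuss the expertise and how it impacts the user's business.",
}


def build_system_prompt(context: str, approach: dict[str, str]) -> str:
    """Join the context and the per-inquiry guidelines into one prompt."""
    lines = [context, "", "Approach:"]
    lines += [f"- {kind}: {rule}" for kind, rule in approach.items()]
    return "\n".join(lines)
```

Keeping each part in its own variable makes it easier to iterate on one section of the prompt without touching the others.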

Response Format

Tone and Style: For the website, a tech-savvy and straightforward tone was used, while for Slack, a friendly and optimistic tone with emojis was preferred.

Example: Website: Our AI solutions can revolutionize your business operations. Would you like to know more about AI implementation or our success stories?

Example: Slack: Welcome to Santex! 😊 I am here to help you with any questions. How can I assist you today?

Word Limit: Responses were kept concise, never exceeding 100 words.

Example: In prioritizing emerging technologies for your digital transformation strategy, several key areas should be considered: AI, IoT, Cloud Computing. Which of these interests you most?

Formatting: Use of bullet points and lists to enhance readability.

Example:

Key areas of focus:

- Artificial Intelligence (AI)

- Internet of Things (IoT)

- Cloud Computing

Let us know which area you would like to explore further.
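The 100-word limit is easy to verify mechanically during testing. The helper below is a sketch that counts whitespace-separated words; the function names are illustrative.

```python
MAX_WORDS = 100


def word_count(text: str) -> int:
    """Count whitespace-separated words in a response."""
    return len(text.split())


def within_word_limit(response: str, limit: int = MAX_WORDS) -> bool:
    """Return True when the response does not exceed the word limit."""
    return word_count(response) <= limit
```

A check like this only measures length. Whether the model actually respects the limit still depends on the instructions in the system prompt.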

Function Integration

Data Retrieval Functions: Implemented a function (santex_data) to fetch accurate and up-to-date information relevant to user inquiries.

Example: Using santex_data to fetch the latest information: According to our latest data, integrating AI into your business can increase efficiency by up to 40%. Would you like to learn more about our AI solutions?
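The sketch below shows one way a function such as `santex_data` can be routed when the model requests it. The `topic` parameter, the placeholder knowledge store, and the dispatcher are assumptions for illustration; the source does not describe the real signature or data source.

```python
import json

# Hypothetical stand-in for the knowledge source behind santex_data.
_PLACEHOLDER_KNOWLEDGE = {"ai": "Placeholder text about AI solutions."}


def santex_data(topic: str) -> str:
    """Return stored text for a topic, or an explicit 'no data' marker."""
    return _PLACEHOLDER_KNOWLEDGE.get(topic.lower(), "NO_DATA")


TOOLS = {"santex_data": santex_data}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the function the model asked for, using its JSON-encoded arguments."""
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**json.loads(arguments_json))
```

Returning an explicit marker when nothing is found gives the prompt a clear signal to say the information is unavailable instead of inventing an answer.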

Clarifying Questions: Developed strategies to ask follow-up questions for better understanding of user needs.

Example: Could you tell me more about your business and how you are currently approaching digital transformation? This will help us provide more tailored advice.

User Interaction Guidelines

Website Users: Focused on collecting contact information from interested users and highlighting Santex’s solutions.

Example: We offer comprehensive digital transformation solutions tailored to your needs. Can I have your name and email to send you more detailed information?

Filtering and Error Handling: Developed filters to manage inappropriate content and prepared messages for handling performance issues.

Example of Filtering: I am sorry, but I cannot engage in conversations that promote hate. I am here to answer all your questions related to Santex.

Example of Error Handling: Sorry... I missed that. For now, I am experiencing some performance issues, which are beyond my control. Rest assured I will be back shortly. Please ping me again in a few minutes.
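Filtering and error handling can be combined in a thin wrapper around the model call. This is a simplified sketch: the blocked-term list is a placeholder, `generate` stands for whatever function calls the model, and a real filter would likely rely on a moderation service rather than substring matching.

```python
from typing import Callable

FILTER_MESSAGE = (
    "I am sorry, but I cannot engage in conversations that promote hate. "
    "I am here to answer all your questions related to Santex."
)
ERROR_MESSAGE = (
    "Sorry... I missed that. For now, I am experiencing some performance "
    "issues, which are beyond my control. Rest assured I will be back shortly. "
    "Please ping me again in a few minutes."
)
BLOCKED_TERMS = {"blocked_term_placeholder"}  # hypothetical list


def safe_reply(user_message: str, generate: Callable[[str], str]) -> str:
    """Return a prepared message for blocked content or model failures."""
    lowered = user_message.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return FILTER_MESSAGE
    try:
        return generate(user_message)
    except Exception:
        # Any failure in the model call falls back to the prepared message.
        return ERROR_MESSAGE
```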

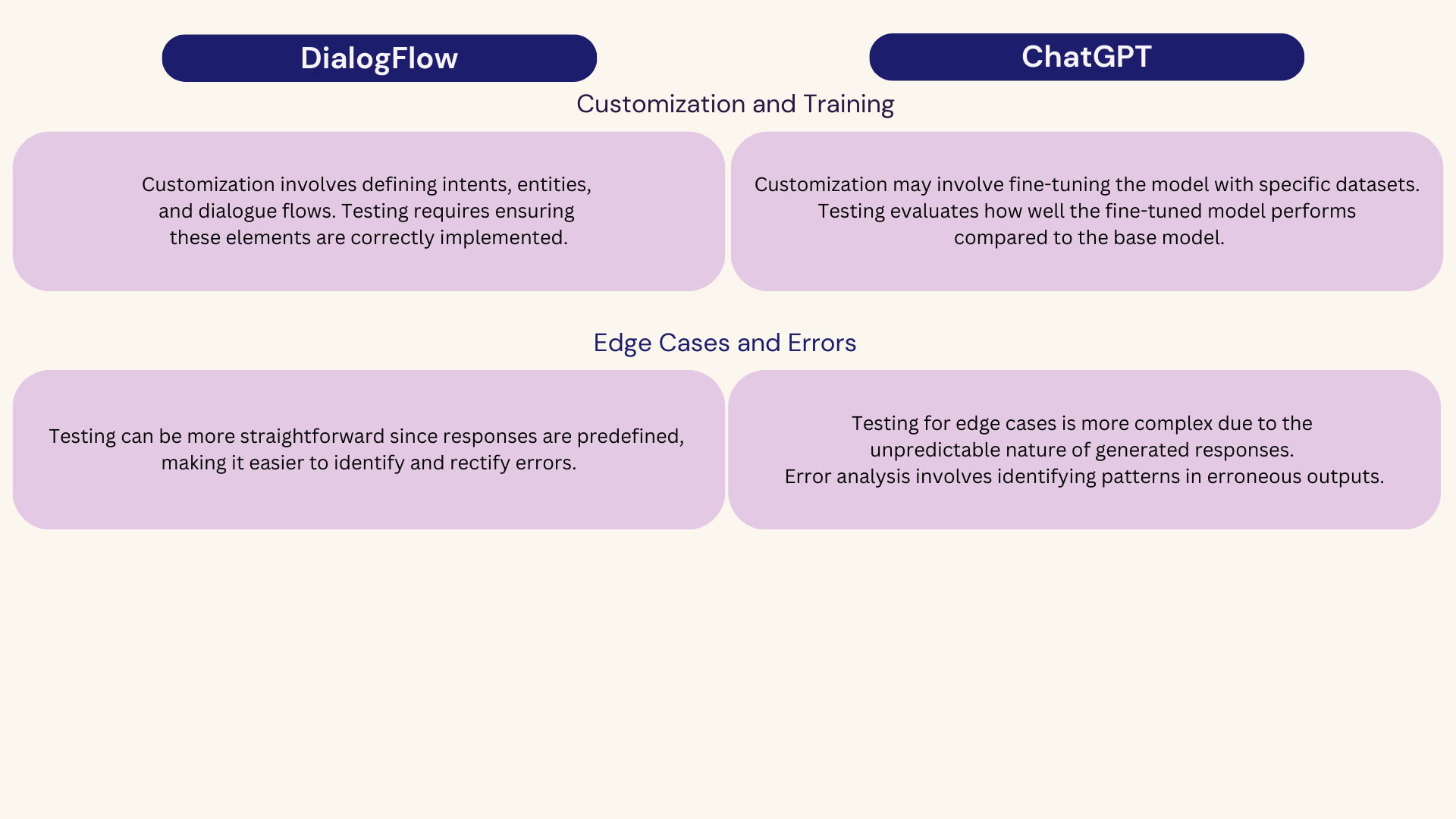

Testing

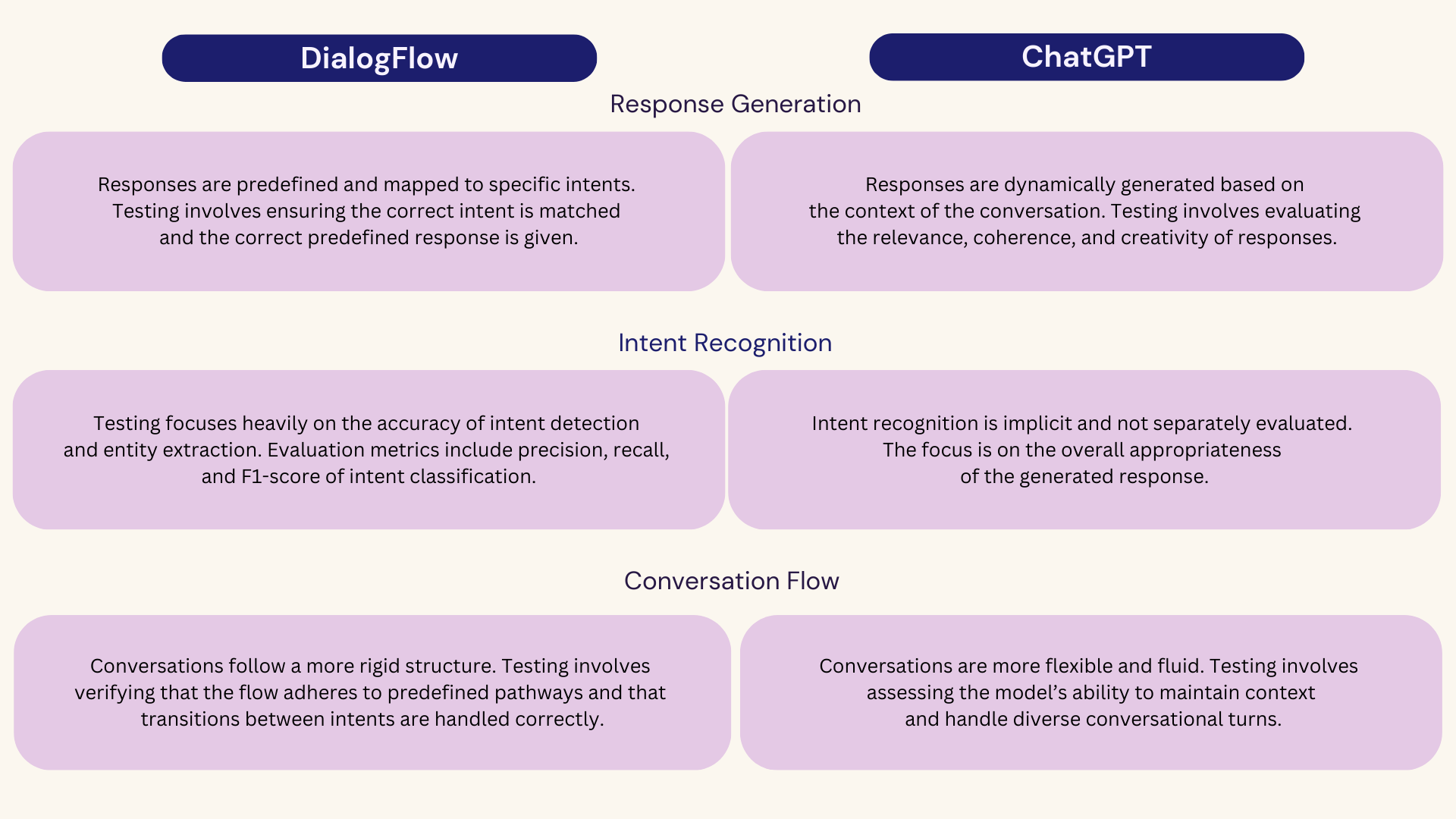

Just as in the process of designing the conversational experience, the testing process had to be approached understanding the difference between non-generative and generative technologies.

When comparing the testing and evaluation of a chatbot powered by a non-generative tool like Dialogflow to one powered by a large language model (LLM) such as GPT-4, there are several key differences. These pertain to aspects such as test methodology, performance metrics, and the nature of interactions.

Methodology

This evaluation aims to assess various aspects of the responses, including relevance, completeness, accuracy, and adherence to specific guidelines. The following sections detail the criteria used for evaluation, the process of systematizing annotations, and the tools employed to analyze the data. Through this systematic approach, we aim to identify areas for improvement and ensure that the chatbot delivers high-quality, accurate, and user-relevant interactions.

Define evaluation criteria

Relevance: The response must directly address the question or topic raised.

Completeness: The response should adequately cover all important aspects of the question.

Accuracy: The information provided in the response must be precise and correct. It should not contain false or inaccurate information.

Language: Record the language in which the request was made and whether the virtual assistant continued the conversation in the same language or switched to English.

Number of words: The number of words in the response. Measure the response length to estimate costs associated with processing and generating responses.

Topic: Record whether restricted topics are responded to or not.

Type of error:

Missing Information: Evaluate instances where the requested information is not found in the knowledge base with which the model was personalized through training.

Hallucinations: Count the number of instances where the model generates information that is not grounded in the input data or real-world facts, resulting in inaccurate or fabricated content.

API Failures: Document any failures in the OpenAI API during the interaction.
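These criteria can be captured as one record per evaluated response. The field names below are assumptions chosen to mirror the list above, not the schema actually used in the project.

```python
from dataclasses import dataclass
from typing import Optional

ERROR_TYPES = {"missing_information", "hallucination", "api_failure"}


@dataclass
class Annotation:
    question: str
    response: str
    relevant: bool
    complete: bool
    accurate: bool
    same_language: bool
    restricted_topic_answered: bool
    error_type: Optional[str] = None  # None when no error was observed

    def __post_init__(self) -> None:
        # Reject labels outside the three error types defined above.
        if self.error_type is not None and self.error_type not in ERROR_TYPES:
            raise ValueError(f"Unknown error type: {self.error_type}")

    @property
    def word_count(self) -> int:
        """Number of whitespace-separated words in the response."""
        return len(self.response.split())
```

Validating the error label at creation time keeps manual annotations consistent before they reach any report.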

Creating the methodology

Creation of Annotation Scripts

Objective: Develop scripts to manipulate and format data, enabling the creation of a structured annotation system.

Implementation: Write scripts in Python and Google Apps Script to process chatbot conversation data, standardize the format, and prepare it for annotation.
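As an illustration of what such a script might look like, the sketch below pairs each user message with the assistant reply that follows it and writes the result as CSV with empty annotation columns. The input format and column names are assumptions, not the project's actual data layout.

```python
import csv
import io

COLUMNS = ["question", "response", "relevance", "completeness", "accuracy"]


def to_rows(conversation: list[dict]) -> list[dict]:
    """Pair each user message with the assistant message right after it."""
    rows = []
    for current, following in zip(conversation, conversation[1:]):
        if current["role"] == "user" and following["role"] == "assistant":
            rows.append({
                "question": current["content"].strip(),
                "response": following["content"].strip(),
                # Left blank for the annotator to fill in.
                "relevance": "",
                "completeness": "",
                "accuracy": "",
            })
    return rows


def to_csv(rows: list[dict]) -> str:
    """Serialize annotation rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```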

Generation of Analytical Reports

Objective: Produce comprehensive reports that summarize the annotations and analyze the conversations.

Implementation: Create detailed reports highlighting key findings from the annotations. These reports should include metrics on relevance, completeness, accuracy, error types, topic adherence, language consistency, and response length.
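A report of this kind reduces to a few aggregates over the annotations. The sketch below assumes each annotation is a dictionary with `relevant`, `accurate`, `word_count`, and `error_type` keys; these names are illustrative.

```python
from collections import Counter


def summarize(annotations: list[dict]) -> dict:
    """Compute simple rates, average length, and error counts."""
    total = len(annotations)
    if total == 0:
        return {"total": 0}

    def rate(key: str) -> float:
        # Share of annotations where the given flag is true.
        return sum(1 for a in annotations if a[key]) / total

    return {
        "total": total,
        "relevance_rate": rate("relevant"),
        "accuracy_rate": rate("accurate"),
        "avg_words": sum(a["word_count"] for a in annotations) / total,
        "errors": dict(Counter(a["error_type"] for a in annotations if a["error_type"])),
    }
```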

Manual Annotation and Systematization

Initial Phase

Method: Perform manual annotations to evaluate chatbot responses based on the defined criteria (relevance, completeness, accuracy, etc.).

Outcome: Gain a thorough understanding of the data and refine the evaluation methodology.

Systematized Phase

Method: Once the methodology was validated through manual annotation, the process was systematized using Tableau and ChatGPT.

Implementation: Use Tableau to automate the annotation and analysis process, enabling efficient and scalable evaluation of chatbot conversations.

Deliver

What insights emerged?

- Data Source Organization: It became evident that improving the organization of our data sources used for model training is crucial. We recognized the necessity to restructure content into a more concise question-and-answer format to enhance the effectiveness of the chatbot.

- Error Margin Awareness: Given the probabilistic nature of the model, we acknowledged the importance of considering error margins. Communicating these effectively to stakeholders helped in setting realistic expectations regarding the performance of the chatbot.

- Response Length Optimization: The generative nature of the model led us to address the issue of lengthy responses, which could overwhelm users, particularly on certain devices. We conducted tests to condense responses, ensuring they are concise and minimize cognitive load on users.

These insights guided us in refining the functionality of the bot and user experience, aligning it more closely with user expectations and device capabilities.

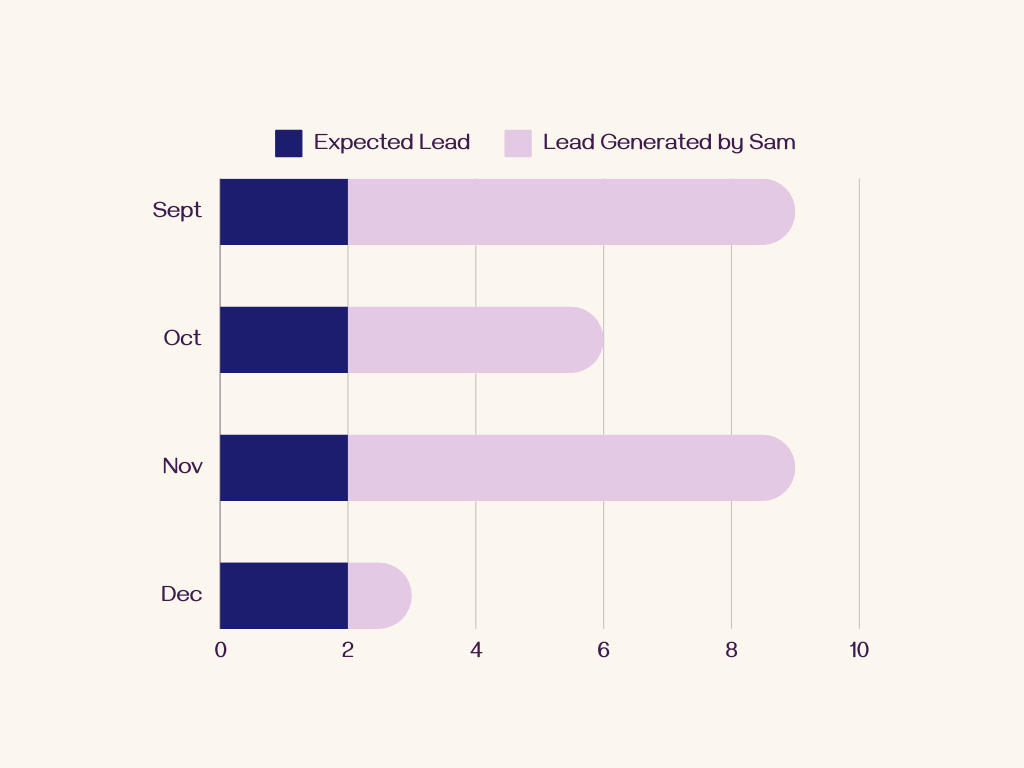

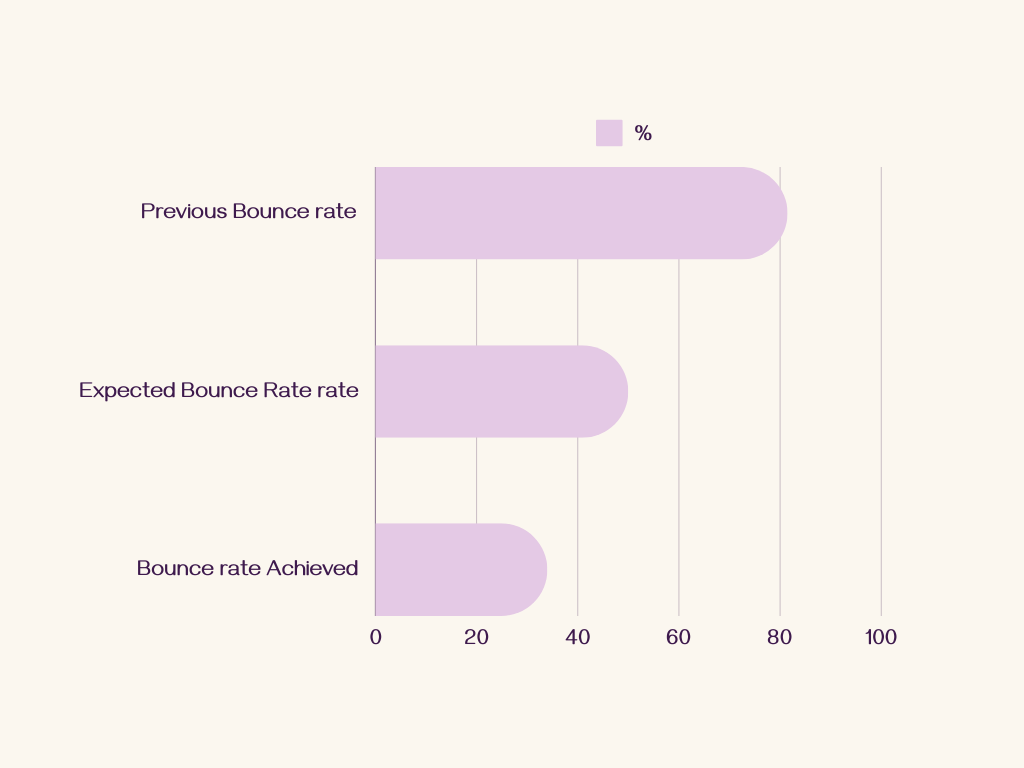

Post Launch

Following the deployment to production, the analysis of data collected from real users yielded the following insights:

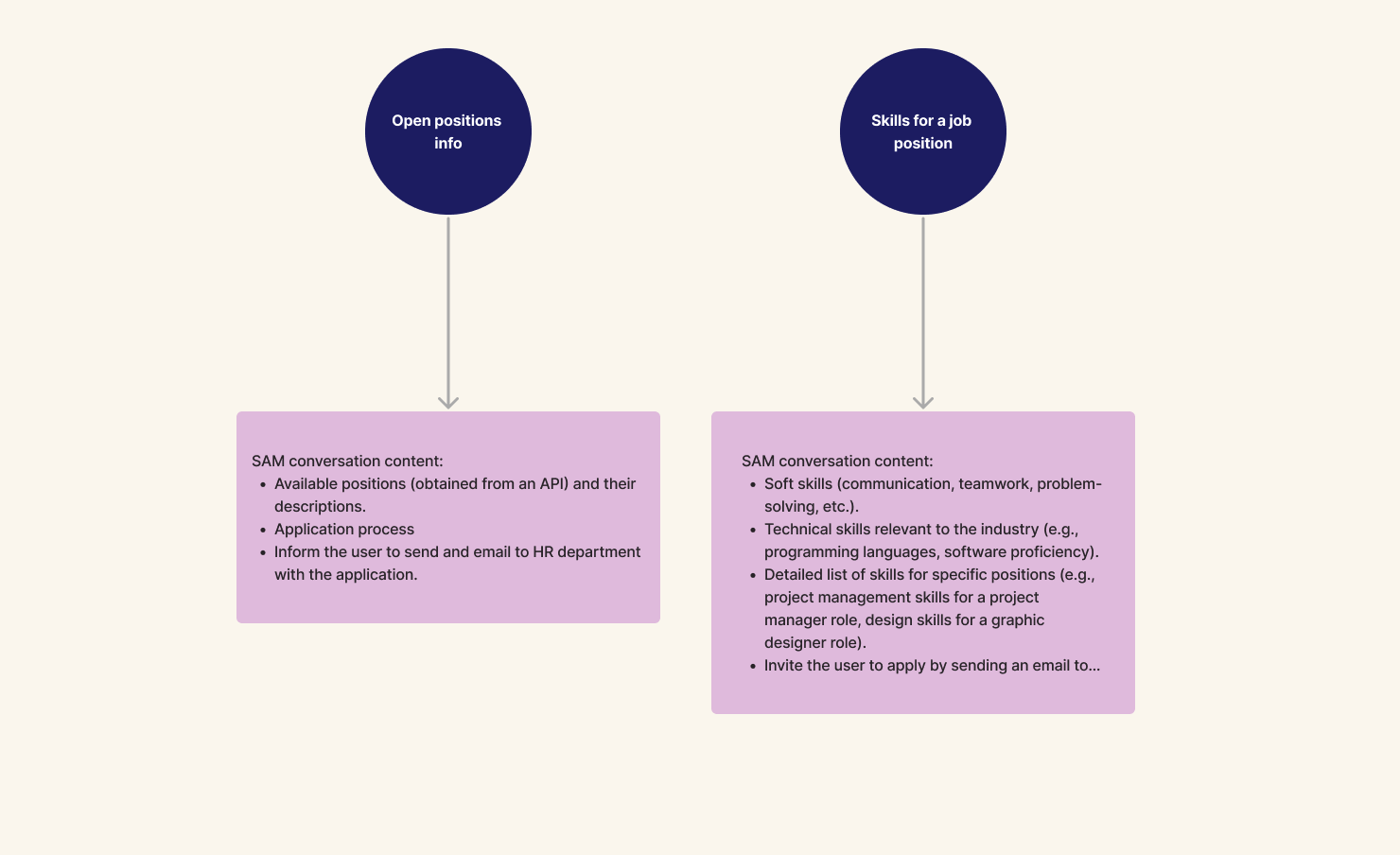

1. Identification of an Unexpected User Group:

We discovered a segment of users who visited the site primarily to explore job opportunities. These users engaged with SAM to inquire about available positions, salary information, and tips for preparing for technical interviews.

Actions Taken: We created a detailed user persona for this group, mapped out potential conversational flows, and developed data sources to provide relevant information.

2. Welcome Message Requirement:

There was a clear need for a welcome message to help users understand how SAM could assist them. Despite this, stakeholders decided against adding the message.

3. Enhanced Visual Aids for Navigation:

Users required more visual aids to better navigate the site. Heatmap data revealed that users were searching the entire site for clickable elements, indicating confusion about navigation.

Future Actions: Although this behavior was anticipated, the data confirmed the need for improved visual guidance.

GOALS ACHIEVED

LESSONS LEARNED

- Integrating a Large Language Model (LLM) into a chatbot involves more than simply switching from one tool to another. Since an LLM is fundamentally different from traditional tools, the entire development paradigm shifts, requiring new approaches to problem-solving.

- Familiarity with LLM-related concepts is crucial for navigating the challenges that arise during the design and development of the chatbot.

FUTURE IMPROVEMENTS

- Enhanced Visual Content: Incorporating more visual content can enhance the user experience by making interactions smoother and reducing cognitive load.

- Automated Interaction Testing: Systematizing the testing process through scripted interactions is essential to ensure consistent performance and identify potential issues early.
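Scripted interaction testing could start from something as small as the sketch below. Here `ask` stands for any function that sends a question to the assistant and returns its answer, and the checks (an expected phrase and a word limit) are illustrative assumptions rather than the project's actual test suite.

```python
from typing import Callable


def run_scripted_checks(
    ask: Callable[[str], str],
    cases: list[tuple[str, str]],
    max_words: int = 100,
) -> list[tuple[str, str]]:
    """Run each scripted question and collect (question, reason) failures."""
    failures = []
    for question, must_include in cases:
        answer = ask(question)
        if must_include.lower() not in answer.lower():
            failures.append((question, "missing expected phrase"))
        if len(answer.split()) > max_words:
            failures.append((question, "response too long"))
    return failures
```

Because generative answers vary between runs, checks like these work best on loose properties such as a required phrase or length, not on exact wording.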

FINAL THOUGHTS

- A chatbot powered by an LLM is not a one-size-fits-all solution for all conversational products. It is important to analyze the objectives of the chatbot and user needs to determine if a generative tool is the best option.